Welcome! This post starts my Cisco Certified Network Professional (CCNP) Enterprise Core (ENCOR) series.

I am here to break down the components of the ENCOR exam blueprint to help others studying for the exam. These posts are not intended to contain enough detail to pass the exam on their own. The intention is to supplement other learning materials and have fun learning the concepts. I have found that practising the concepts in labs and linking technical concepts to memorable scenarios is how I remember the theory well enough to pass certification exams.

I will publish these posts as I study for the CCNP exam myself. I hope you find as much benefit from them as I do.

The first item on the blueprint is design principles in enterprise networks, so here we go with part 1 of Enterprise Network Design.

Fabric Capacity Planning

When designing any network, several considerations need to be taken into account. The answers to the questions below will form the basis of your hardware and physical topology decisions and ultimately determine the cost of your network. This step is best done before purchasing any hardware, as once you have done some digging you may find that you need different hardware.

Before you start that network diagram, ask yourself the questions below.

Where are my endpoints?

What is the physical location of your endpoints? This helps you determine where you would be installing switches and where you need to ensure you have uplinks and the required cabling.

How many endpoints do I need in that location?

This determines the number of switch ports you need and, in turn, the number of switches needed in a particular location.

✅ When calculating the number of switch ports you need, do not forget the uplink ports!
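To make the uplink warning concrete, here is a minimal Python sketch of the port maths. It assumes uplinks are taken from the same front-panel ports as endpoints (switches with dedicated uplink modules change the sums), and the function name and example numbers are hypothetical.

```python
import math

def switches_needed(endpoints: int, ports_per_switch: int, uplinks_per_switch: int) -> int:
    """Return how many access switches a location needs (hypothetical helper).

    Assumes each switch reserves `uplinks_per_switch` of its front-panel
    ports for uplinks, so only the remainder can be used for endpoints.
    """
    usable_ports = ports_per_switch - uplinks_per_switch
    if usable_ports <= 0:
        raise ValueError("uplinks consume every port on the switch")
    # Round up: a partially filled switch still has to be bought.
    return math.ceil(endpoints / usable_ports)

# Hypothetical floor: 96 endpoints on 48-port switches.
print(switches_needed(96, 48, 2))  # 3 (46 usable ports per switch)
print(switches_needed(96, 48, 0))  # 2 (forgetting the uplinks under-buys by one switch)
```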

Do endpoints need Power over Ethernet (PoE)?

Not only does PoE increase the cost of an individual switch, but you also need to ensure the switch hardware can power the number of devices you need. Switches have a total PoE power budget, which limits how many devices can be powered at the same time. Check this against the device datasheet and the manufacturer's website.
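A rough PoE budget check can be sketched the same way. The per-class figures are the maximum power a switch port allocates under IEEE 802.3af/at (802.3bt classes 5 to 8 are left out). The sketch assumes worst-case allocation of the full class maximum per port; the example closet and the 370 W budget are hypothetical, so substitute the real numbers from your switch datasheet.

```python
# Maximum power allocated at the switch port per IEEE 802.3af/at class, in watts.
PSE_CLASS_WATTS = {0: 15.4, 1: 4.0, 2: 7.0, 3: 15.4, 4: 30.0}

def poe_demand(devices: dict[int, int]) -> float:
    """Worst-case PoE demand for {poe_class: device_count},
    assuming every port is allocated its full class maximum."""
    return sum(PSE_CLASS_WATTS[cls] * count for cls, count in devices.items())

def fits_budget(devices: dict[int, int], budget_watts: float) -> bool:
    """True if the worst-case demand fits within the switch's PoE budget."""
    return poe_demand(devices) <= budget_watts

# Hypothetical wiring closet: 20 class 2 phones, 6 class 4 APs, 4 class 3 cameras.
closet = {2: 20, 4: 6, 3: 4}
print(f"{poe_demand(closet):.1f} W")  # 381.6 W
print(fits_budget(closet, 370.0))     # False against a hypothetical 370 W budget
```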

What bandwidth do I need, and what is the expected utilisation of the links?

Bandwidth planning is super important! Whilst businesses will be conscious of how much WAN or internet bandwidth they have (usually because it's paid for per megabit), LAN bandwidth is often forgotten. Bandwidth matters inside the LAN too; there's not much point in having 1 Gbps internet if your LAN maxes out at 100 Mbps.

The links on your switches must be able to carry the traffic you need them for. Uplinks, for example, need to handle the combined traffic expected from every port behind them. Understanding traffic flow is critical here; otherwise, you may run into unexpected problems when your endpoints begin sending traffic.
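Uplink capacity is often expressed as an oversubscription ratio: total access bandwidth divided by total uplink bandwidth. This sketch assumes both uplinks actively forward traffic (for example, bundled in an EtherChannel); if Spanning Tree blocks one of them, the effective ratio doubles. The switch in the example is hypothetical.

```python
def oversubscription(access_ports: int, access_gbps: float,
                     uplinks: int, uplink_gbps: float) -> float:
    """Ratio of total edge bandwidth to total uplink bandwidth (N means N:1)."""
    return (access_ports * access_gbps) / (uplinks * uplink_gbps)

# Hypothetical 48 x 1 Gbps access switch with two 10 Gbps uplinks.
print(f"{oversubscription(48, 1, 2, 10):.1f}:1")  # 2.4:1 with both uplinks forwarding
print(f"{oversubscription(48, 1, 1, 10):.1f}:1")  # 4.8:1 if STP blocks one uplink
```

A commonly cited Cisco campus rule of thumb is around 20:1 from access to distribution and 4:1 from distribution to core, but your own traffic flows should drive the final number.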

How critical is that area of the network?

Now, I know everyone will tell you the whole network is critical, and they cannot afford for it to go offline. In reality, however, most businesses will have areas in their network that are not as critical as others. This is where you can potentially make your money stretch further. Understanding the most critical components of the infrastructure will help you determine the level of redundancy needed in that part of the network.

📝 An Example

The C-level offices – this is likely a very critical connection.

The kitchen and chill-out area provides some wired and wireless connections – this may be less critical, because the business may want colleagues talking in the kitchen rather than working.

Knowing the business requirements here is very important, so ask the stakeholders about these things before designing the network.

Traffic Flows – North-South or East-West?

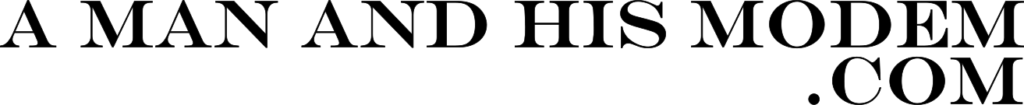

The flow of traffic is an important aspect when designing a network. You may hear the terms north-south and east-west. These terms are used to describe if traffic is flowing up and down the network or horizontally. In network diagrams, servers and end devices are typically placed at the bottom of the page, whilst the internet or external networks are placed at the top. An example of north-south traffic would be end devices to the internet, whereas east-west would be a client-to-server or server-to-server.

Below is a little illustration to solidify these terms in your head.

Knowing the amount of traffic flowing in each direction lets you design the network and choose correctly sized hardware based on traffic requirements.
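Once you have traffic estimates per flow, tallying them by direction is straightforward. The zone names and figures below are hypothetical; the only rule encoded is the one from the text: a flow that crosses to the internet or WAN is north-south, and everything else is east-west.

```python
from collections import Counter

# Hypothetical zone labels for anything beyond the network edge (top of the diagram).
EXTERNAL_ZONES = {"internet", "wan"}

def direction(src_zone: str, dst_zone: str) -> str:
    """North-south if the flow crosses the network edge, otherwise east-west."""
    if src_zone in EXTERNAL_ZONES or dst_zone in EXTERNAL_ZONES:
        return "north-south"
    return "east-west"

def tally(flows: list[tuple[str, str, float]]) -> Counter:
    """Sum expected Mbps per direction from (src_zone, dst_zone, mbps) tuples."""
    totals: Counter = Counter()
    for src, dst, mbps in flows:
        totals[direction(src, dst)] += mbps
    return totals

flows = [
    ("users", "internet", 400.0),   # browsing and SaaS
    ("users", "servers", 250.0),    # client-to-server
    ("servers", "servers", 900.0),  # replication and backups
]
print(dict(tally(flows)))  # {'north-south': 400.0, 'east-west': 1150.0}
```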

So once you have done some capacity planning, it’s time to look at the network architecture.

💡 Wireless planning has been left out on purpose here. I will dig deeper into WLAN design in another post.

Physical Network Architectures

By starting at the physical layer of our good friend, the OSI Model, you can be sure that you meet the requirements determined during your capacity planning. If you design the routing first, you may hit physical barriers that you did not think about or that were not found during the initial planning stage. A common problem I have seen is engineers misjudging the rack space required to house a switch, or a switch chassis being too big for the rack. This is a fun problem to have after taking the network offline!

Typically, there are two architecture topologies you will see inside campus networks (I am not going into datacenter designs just yet). The first is the three-tier architecture, probably the most common architecture you will see in larger enterprises. The second is the two-tier or collapsed core architecture, which you will probably see in small and mid-size businesses.

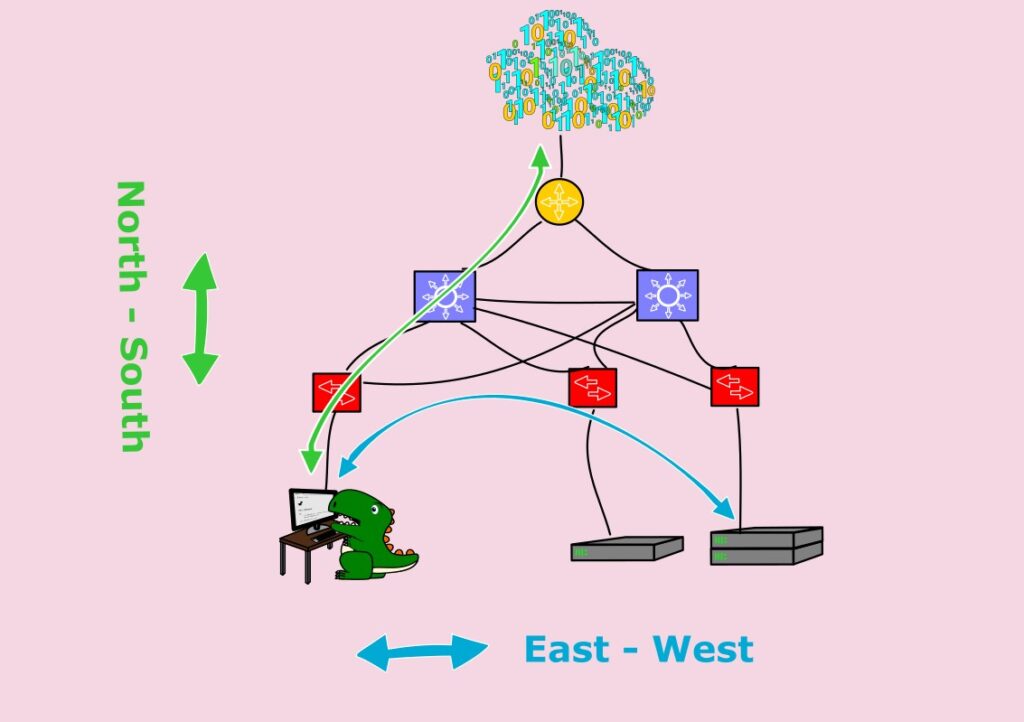

Three Tier Architecture

⚠️ Don’t confuse this with the Three Tier Architecture defined in application development.

The three-tier architecture was designed for modularity but can be expensive. As you can see from the diagram, this architecture does what it says on the tin: it has three tiers. You have the Core, Distribution and Access layers, each of which has specific functions.

Core Layer (aka Backbone)

The core layer is built of higher-end (expensive) switches and is a critical part of your infrastructure. The purpose of the core layer is to provide high-speed switching, reliability and fault tolerance in the network. You connect your distribution switches (aka aggregation switches) to the core. As you require more capacity, you add cheaper distribution switches, allowing you to scale horizontally quickly. You usually want redundancy in the core, so at least two core switches are recommended, depending on the size of your network.

⚠️ Remember, the core is meant to be fast! Avoid putting CPU-intensive processes such as packet inspection or QoS classification on the core layer. Save that for the access layer.

Distribution-Access Block

Cisco, amongst others, groups the distribution and access layers into a distribution block. This logical grouping allows you to treat each block as a modular extension of the network. Blocks can be added and removed easily without affecting the rest of the network.

Distribution Layer

Distribution switches are also called aggregation switches, which describes their job: they aggregate the access switches. Another use case for distribution switches is connecting server infrastructure.

💡 The general rule is to put devices with heavy east-west traffic onto the distribution layer.

Generally speaking, the distribution layer contains more capable switches. These handle routing between VLANs, QoS and security filtering via ACLs. The distribution layer is usually thought of as the demarcation point for broadcast domains.
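To see why the distribution layer acts as the broadcast domain boundary, this sketch decides whether two hosts can talk within their VLAN or must be routed. The VLAN IDs and subnets are a hypothetical addressing plan, and it assumes the gateway (SVI) for each VLAN lives on the distribution switches. It uses only Python's standard `ipaddress` module.

```python
import ipaddress

# Hypothetical VLAN-to-subnet plan; each gateway is assumed to sit on the distribution layer.
VLANS = {
    10: ipaddress.ip_network("10.1.10.0/24"),  # users
    20: ipaddress.ip_network("10.1.20.0/24"),  # voice
    30: ipaddress.ip_network("10.1.30.0/24"),  # printers
}

def vlan_for(host: str) -> int:
    """Return the VLAN whose subnet contains the host address."""
    addr = ipaddress.ip_address(host)
    for vlan_id, net in VLANS.items():
        if addr in net:
            return vlan_id
    raise ValueError(f"{host} is not in any planned VLAN")

def path(src: str, dst: str) -> str:
    """Same VLAN stays inside one broadcast domain; different VLANs need routing."""
    if vlan_for(src) == vlan_for(dst):
        return "switched at Layer 2 within the VLAN"
    return "routed between VLANs at the distribution layer"

print(path("10.1.10.5", "10.1.10.77"))  # switched at Layer 2 within the VLAN
print(path("10.1.10.5", "10.1.30.9"))   # routed between VLANs at the distribution layer
```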

Access Layer

The access layer is where all your endpoints should connect. It is usually the least critical in terms of redundancy. Here, you find features such as QoS classification, Power over Ethernet, DHCP snooping and 802.1X port security. These switches are generally cheaper models, often limited to Layer 2 switching without the capability of routing at Layer 3.

✅ Keep complexity at the access edge

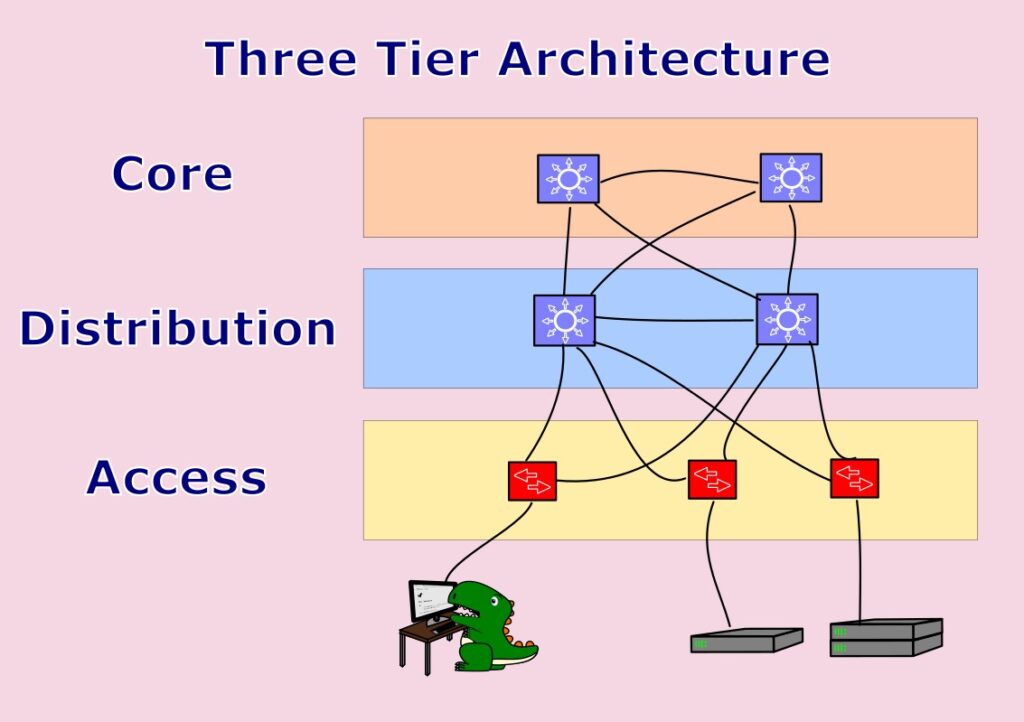

Two Tier or Collapsed Core

The collapsed core architecture once again does exactly what it says on the tin: the core and distribution layers are combined into a single layer. There are obvious advantages to this, like cost! You remove the two beefy, expensive core switches. There is also less to manage, helping with operational costs. However, there are some pretty major downsides:

- Lack of modularity – Every time you need to scale and add a distribution switch, you need to link all existing access switches back to the new distribution switch and reconfigure each switch accordingly.

- Limited Isolation – There is limited isolation from the core network. For example, server domains are not kept to a single pair of distribution switches, and east-west traffic traverses the core. This can impact the stability of the core network when making changes.

- Difficult change control – Even a simple VLAN change would need to traverse the core (because the core network is not routed) and could impact Spanning Tree, resulting in unforeseen outages.
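The modularity point can be put into numbers. This sketch assumes a redundant pair of core switches with each distribution switch connected to both (a common but not universal design); the function names and the 40-switch campus are hypothetical.

```python
def new_links_collapsed_core(existing_access_switches: int) -> int:
    """Adding one collapsed-core switch: every existing access switch needs
    a new uplink to it, and each of those switches must be reconfigured."""
    return existing_access_switches

def new_core_links_three_tier(new_distribution_switches: int = 2,
                              core_switches: int = 2) -> int:
    """Adding a distribution block: only the new distribution switches connect
    to the core (each one to every core switch). Existing access switches are
    untouched; the new block's own access uplinks go to new hardware anyway."""
    return new_distribution_switches * core_switches

print(new_links_collapsed_core(40))  # 40 new links, touching 40 existing switches
print(new_core_links_three_tier())   # 4 new core links, existing blocks untouched
```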

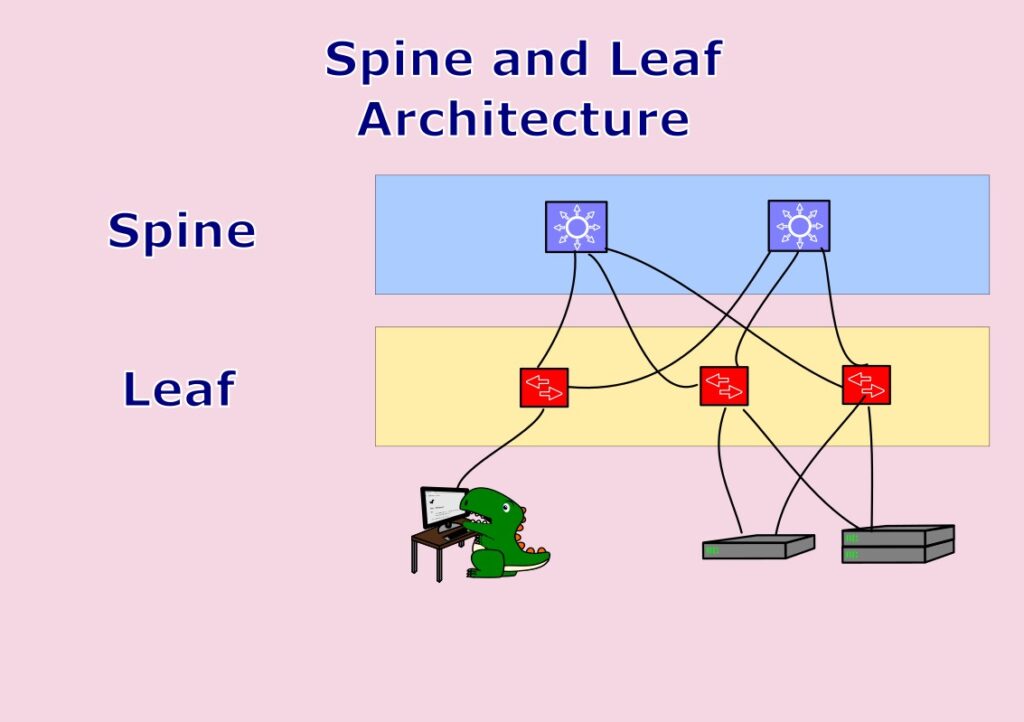

Spine and Leaf Architecture

What’s this? A third architecture.

Spine and Leaf Architectures are becoming increasingly popular, and you will see a fair amount of this design in datacenters. The rise in popularity is largely driven by virtualisation platforms, which generate high volumes of east-west traffic.

Now, if you look at the diagram, you may think it looks exactly the same as the collapsed core architecture, and you would not be wrong. They do look similar. However, there are some subtle differences which make this a popular choice.

Check out the Spine switches. They are not connected to each other directly, only through the leaf switches. Every leaf connects to every spine, so there are multiple equal-cost paths between any two leaves, which lets us use equal-cost multipath (ECMP) routing. Because of ECMP, this architecture is perfect for east-west traffic.
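ECMP typically balances per flow rather than per packet: the switch hashes header fields such as the source and destination addresses and ports, so every packet in a flow takes the same spine and arrives in order, while different flows spread across all the spines. The sketch below uses SHA-256 purely as a stand-in for a switch's hardware hash function; the spine names and addresses are hypothetical.

```python
import hashlib
from collections import Counter

SPINES = ["spine1", "spine2", "spine3", "spine4"]  # hypothetical spine switches

def pick_spine(src_ip, dst_ip, proto, src_port, dst_port, spines=SPINES):
    """Choose a next hop by hashing the flow's 5-tuple (SHA-256 as a stand-in hash).

    The same flow always maps to the same spine; different flows spread
    across all len(spines) equal-cost leaf-to-leaf paths.
    """
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return spines[int.from_bytes(digest[:4], "big") % len(spines)]

flow = ("10.0.1.10", "10.0.2.20", "tcp", 49152, 443)
print(pick_spine(*flow) == pick_spine(*flow))  # True: one flow, one path

# 1,000 flows between the same two hosts, differing only by source port.
usage = Counter(pick_spine("10.0.1.10", "10.0.2.20", "tcp", port, 443)
                for port in range(49152, 50152))
print(usage)  # flows spread across the spines
```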

The Spine and Leaf architecture was based on the Clos network, a non-blocking telephone switching network developed in the 1930s and formalised in the 1950s by Charles Clos. Some people use Clos as another name for Spine and Leaf. They are not the same thing, so be sure you’re actually talking to someone about network architecture and not some old telephones.

💡 If you are interested in the Clos network, here's a link to the Wikipedia article – https://en.wikipedia.org/wiki/Clos_network

OK, enough topology talk for now. As you can see, there is a fair bit to consider when designing a network. Once you have your physical topology set, we can move on to Part 2, the OSI Layer 2 and Layer 3 Design.